400GE Technology and Research Network


#CLUS

Tae Hwang, Architect at Cisco (@TaeHwang16), Supercomputing19 in Denver, CO


© 2019 Cisco and/or its affiliates. All rights reserved. Cisco Public

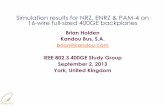

400G

1G

2000

25/50G/100G

2017

10G

40G /100G

20142003 2010 2018

Speed of technology transition is accelerating

Built for the most demanding environments

Built for customer choice and flexibility

Built to last

400G Today: The next frontier for Cloud, Research, and HPC Networking


100 GE to 400 GE

• The 100 Gbps Ethernet standard is based on four parallel lanes of 25 Gbps.

• Reaching 400 Gbps clearly required a way to raise the per-lane rate to 50 Gbps.

• Otherwise, 16 x 25 Gbps lanes would require 32 fibers (16 transmit, 16 receive).

• The solution was a move from NRZ to 4-level Pulse Amplitude Modulation (PAM4), which carries 2 bits per symbol instead of 1.
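To make the NRZ-to-PAM4 step concrete, here is a minimal Python sketch that maps a bit stream onto symbols both ways. It assumes the Gray-coded PAM4 mapping commonly used in IEEE 802.3 (00→0, 01→1, 11→2, 10→3), and the function names are illustrative only. Because each PAM4 symbol carries two bits, a 50 Gbps lane needs roughly half the symbol rate of an NRZ lane at the same bit rate. The sketch ignores FEC and encoding overhead.

```python
# Minimal sketch: NRZ vs PAM4 symbol mapping.
# Assumption: Gray-coded PAM4 levels 0..3 (adjacent levels differ by one bit).
GRAY_PAM4 = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}

def nrz_encode(bits):
    """NRZ: one bit per symbol, two levels (0 or 1)."""
    return list(bits)

def pam4_encode(bits):
    """PAM4: two bits per symbol, four levels (0..3), Gray coded."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]

def symbol_rate_gbd(bit_rate_gbps, bits_per_symbol):
    """Symbol (baud) rate for a lane bit rate, ignoring FEC overhead."""
    return bit_rate_gbps / bits_per_symbol

bits = [1, 0, 0, 1, 1, 1, 0, 0]
print(nrz_encode(bits))         # 8 symbols: [1, 0, 0, 1, 1, 1, 0, 0]
print(pam4_encode(bits))        # 4 symbols: [3, 1, 2, 0]
print(symbol_rate_gbd(50, 1))   # NRZ at 50 Gbps: 50.0 GBd
print(symbol_rate_gbd(50, 2))   # PAM4 at 50 Gbps: 25.0 GBd
```

The cost of this trade is that four levels share the same voltage swing as two, so each eye is smaller and the link is more sensitive to noise.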

Source: accton.com


Ethernet PHY variants

| Name | Medium | Tx fibers | Lanes | Reach | Encoding |
|---|---|---|---|---|---|
| 400GBASE-SR16 | MMF | 16 | 16 x 25 Gbps | 70 m (OM3), 100 m (OM4) | NRZ |
| 400GBASE-DR4 | SMF | 4 | 4 x 100 Gbps | 500 m | PAM4 |
| 400GBASE-FR8 | SMF | 1 | 8 x 50 Gbps (WDM) | 2 km | PAM4 |
| 400GBASE-LR8 | SMF | 1 | 8 x 50 Gbps (WDM) | 10 km | PAM4 |
| 400GBASE-ZR | SMF | 1 | 1 x 400 Gbps (single wavelength, coherent) | 100 km | DP-16QAM |
| 200GBASE-DR4 | SMF | 4 | 4 x 50 Gbps | 500 m | PAM4 |
| 200GBASE-FR4 | SMF | 1 | 4 x 50 Gbps (WDM) | 2 km | PAM4 |
| 200GBASE-LR4 | SMF | 1 | 4 x 50 Gbps (WDM) | 10 km | PAM4 |

400G Direct Attach Cables – 1m, 2m, 3m

400G Active Optical Cables – 1m, 3m, 5m, 7m, 10m, 15m, 20m, 25m, 30m
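As a sanity check on the PHY table, the sketch below encodes the rows as data. It then verifies that lanes times per-lane rate matches the 200G/400G in each name, and it counts total fibers. Two assumptions apply: every transmit fiber has a matching receive fiber, and 400GBASE-ZR is left out because, as a single-wavelength coherent optic, it does not fit the lanes-times-rate model. The class and function names are illustrative only.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Phy:
    name: str
    tx_fibers: int   # "Tx fibers" column
    lanes: int       # fibers or wavelengths carrying data in each direction
    lane_gbps: int

# Rows transcribed from the PHY table (400GBASE-ZR omitted, see lead-in).
PHYS = [
    Phy("400GBASE-SR16", 16, 16, 25),
    Phy("400GBASE-DR4", 4, 4, 100),
    Phy("400GBASE-FR8", 1, 8, 50),
    Phy("400GBASE-LR8", 1, 8, 50),
    Phy("200GBASE-DR4", 4, 4, 50),
    Phy("200GBASE-FR4", 1, 4, 50),
    Phy("200GBASE-LR4", 1, 4, 50),
]

def aggregate_gbps(p: Phy) -> int:
    """Lanes (fibers or wavelengths) times the per-lane rate."""
    return p.lanes * p.lane_gbps

def total_fibers(p: Phy) -> int:
    """Assumes one receive fiber for every transmit fiber (duplex link)."""
    return 2 * p.tx_fibers

for p in PHYS:
    # The name prefix ("400" or "200") should equal lanes x lane rate.
    assert aggregate_gbps(p) == int(p.name.split("G")[0])
    print(f"{p.name}: {aggregate_gbps(p)} Gbps over {total_fibers(p)} fibers")
```

The SR16 row reproduces the 32-fiber figure from the previous slide. DR4 reaches the same 400 Gbps with 8 fibers by using 100 Gbps PAM4 lanes.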


Latency - Let’s break it down

• Source server (top to bottom): Application and its data, Operating system, TCP stack, NIC driver, NIC hardware

• Network: two network devices, each forwarding from Port A to Port B

• Destination server (top to bottom): Application and its data, Operating system, TCP stack, NIC driver, NIC hardware

Latency components along the path:

• Application latency

• OS and TCP stack latency

• NIC latency (source and destination)

• Port-to-port latency (each network device)

• Cable distance latency


App to App Latency Factors

• TCP/IP kernel overhead: 9.42 usecs
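A rough app-to-app latency budget is the sum of the components listed in the breakdown. The sketch below adds them up. Only two numbers come from these slides: the 9.42 usecs TCP/IP kernel overhead and the 190 ns Nexus port-to-port figure. Every other default is a placeholder assumption: NIC latency, cable length, and application time. The propagation constant of about 5 ns per meter assumes light in fiber travels at roughly two thirds of c.

```python
# Rough one-way app-to-app latency budget, in nanoseconds.
# From the slides: 9.42 usecs TCP/IP kernel overhead, 190 ns switch port-to-port.
# Everything else (NIC latency, cable length, app time) is a placeholder assumption.
FIBER_NS_PER_M = 5.0          # light in fiber travels at roughly 2/3 c
KERNEL_OVERHEAD_NS = 9_420    # slide value, treated as one figure for the whole path

def latency_budget_ns(hops, port_to_port_ns=190, nic_ns=1_000,
                      cable_m=10, app_ns=0, kernel_ns=KERNEL_OVERHEAD_NS):
    parts = {
        "application": app_ns,
        "os_tcp_stack": kernel_ns,
        "nic": 2 * nic_ns,                       # source NIC + destination NIC
        "port_to_port": hops * port_to_port_ns,  # one per network device crossed
        "cable": cable_m * FIBER_NS_PER_M,
    }
    parts["total"] = sum(parts.values())
    return parts

tcp = latency_budget_ns(hops=2)
bypass = latency_budget_ns(hops=2, kernel_ns=0)  # e.g. a kernel-bypass stack
print(tcp["total"], bypass["total"])             # 11850.0 2430.0
```

With these placeholder values the kernel term dominates, and the two switch hops contribute only 380 ns. Changing `nic_ns` or `cable_m` shows how the other terms scale.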


Ethernet is Slow for Fabric…?

• Is it true? Packets are forwarded by ASICs on both Ethernet and Infiniband switches:
  • Nexus 35xx port-to-port latency = 190 ns
  • Mellanox SB7700 port-to-port latency = 90 ns
  • Mellanox CS7520 port-to-port latency = 400 ns

• Ethernet switches traditionally used store-and-forward switching; many now use cut-through switching. https://www.cisco.com/c/en/us/products/collateral/switches/nexus-5020-switch/white_paper_c11-465436.html

• What people usually mean is that TCP is slow.

• Is Ethernet bandwidth a generation behind Infiniband?
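Store-and-forward versus cut-through matters because a store-and-forward switch must receive the whole frame before it can start transmitting it. That adds one frame serialization time per hop. A cut-through switch can start once it has read enough of the header to make a forwarding decision. The sketch below computes the wait in both cases. The 64-byte cut-through threshold is an illustrative assumption, and the model ignores preamble, inter-frame gap, and internal pipeline latency.

```python
def serialization_ns(frame_bytes, link_gbps):
    """Time to clock bytes onto the wire: bits / (Gbit/s) == nanoseconds."""
    return frame_bytes * 8 / link_gbps

def per_hop_wait_ns(frame_bytes, link_gbps, mode, header_bytes=64):
    """Time a switch waits for incoming bytes before it can start forwarding."""
    if mode == "store-and-forward":
        return serialization_ns(frame_bytes, link_gbps)
    if mode == "cut-through":
        # Assumed: forwarding decision needs only the first header_bytes.
        return serialization_ns(min(header_bytes, frame_bytes), link_gbps)
    raise ValueError(f"unknown mode: {mode}")

for gbps in (100, 400):
    for size in (1500, 9000):
        sf = per_hop_wait_ns(size, gbps, "store-and-forward")
        ct = per_hop_wait_ns(size, gbps, "cut-through")
        print(f"{size} B @ {gbps}G: store-and-forward {sf:.0f} ns, cut-through {ct:.2f} ns")
```

At 400G the store-and-forward penalty for a 1500-byte frame is 30 ns. That is small next to microseconds of kernel TCP/IP overhead, which fits the point that the slowness people notice is usually TCP rather than Ethernet forwarding.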


Infiniband Network Stack From a Host Perspective

Infiniband uses kernel bypass: it does not go through the OS TCP/IP stack.

Source: mellanox.com


Infiniband – RDMA Based Technology

• Zero-copy - applications can perform data transfers without involving the network software stack. Data is sent and received directly to and from application buffers, without being copied between network layers.

• Kernel bypass - applications can perform data transfers directly from user-space without kernel involvement.

• No CPU involvement - applications can access remote memory without consuming any CPU time on the remote server. The remote server's memory is read without any intervention from the remote process (or processor), and the remote CPU's caches are not filled with the accessed memory content.


RDMA over Converged Ethernet (RoCE)

Software stack (top to bottom):

• Customer app

• MPI middleware

• OpenFabrics Enterprise Distribution (OFED)

• Network hardware/Ethernet

*Existing Infiniband-based HPC applications do not need modifications.

RDMA supports zero-copy networking by enabling the network adapter to transfer data directly to or from application memory, eliminating the need to copy data between application memory and the data buffers in the operating system.

The RoCEv2 protocol runs on top of either UDP/IPv4 or UDP/IPv6.[2] UDP destination port 4791 has been reserved for RoCEv2.[10]
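To show where RoCEv2 sits on the wire, the sketch below uses Python's `struct` module to pack a UDP header with destination port 4791, followed by a 12-byte InfiniBand Base Transport Header (BTH). The BTH field layout follows the InfiniBand specification as we understand it. The opcode, queue pair number, PSN, payload, and source port are arbitrary example values. The UDP checksum is left at zero, and the trailing ICRC is a zero-filled placeholder rather than a real CRC. This illustrates the encapsulation only; it is not a working RDMA sender.

```python
import struct

ROCEV2_UDP_DPORT = 4791   # UDP destination port reserved for RoCEv2

def bth(opcode, dest_qp, psn, pkey=0xFFFF, ack_req=0):
    """Pack a 12-byte InfiniBand Base Transport Header (network byte order).

    SE, MigReq, PadCnt and TVer are all left at 0 in this sketch.
    """
    return struct.pack(
        "!BBHII",
        opcode,
        0,                                   # SE | MigReq | PadCnt | TVer
        pkey,
        dest_qp & 0xFFFFFF,                  # 8 reserved bits (0) + 24-bit destination QP
        (ack_req << 31) | (psn & 0xFFFFFF),  # AckReq bit + 7 reserved bits + 24-bit PSN
    )

def rocev2_udp_datagram(src_port, bth_bytes, payload):
    """UDP header + BTH + payload + ICRC placeholder (ICRC is NOT computed here)."""
    icrc = bytes(4)
    length = 8 + len(bth_bytes) + len(payload) + len(icrc)
    udp = struct.pack("!HHHH", src_port, ROCEV2_UDP_DPORT, length, 0)  # checksum 0
    return udp + bth_bytes + payload + icrc

RC_SEND_ONLY = 0x04   # example opcode: reliable connection "SEND Only"
pkt = rocev2_udp_datagram(0xC000, bth(RC_SEND_ONLY, dest_qp=0x12, psn=1), b"hello")
sport, dport, length, _ = struct.unpack("!HHHH", pkt[:8])
print(len(pkt), dport, length)   # 29 4791 29
```

The fixed destination port identifies RoCEv2 to the receiver. The UDP source port is free, so implementations commonly vary it per flow, which lets ECMP hashing in the fabric spread RoCEv2 traffic across links.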


Terabit Scaling Switch

Nexus 3400S:

• Two 12.8T high-density 400GE & 100GE switches for web/hyperscale/HPC customers

• 12.8T fixed and semi-modular options for flexible 100/400G port configurations

• Key differentiator: lowest power and best latency in industry

Cost-efficiently build 100G/400G fabric

Nexus 9300GX:

• Two 400GE-enabled leaf & spine switches for large enterprises & SP customers to extend the power of ACI to 400G

• ACI leaf & spine functionality in the same switch

• Key differentiators:
  • Best route scale in industry
  • Full data and system telemetry capabilities (no sampled data)
  • Only 400G silicon with SRv6 forwarding at line rate


Small Enough?

Single switch, 128 x 100G:

• 128 x 100GE or 168 x 50GE*

• No hop

• No oversubscription

• Best performance

Two switches, 96 x 100G each, linked by 8 x 400G:

• 192 x 100GE or 328 x 50GE*

• One hop

• 3:1 oversubscription

*Nexus 3400-S has a 168 logical port limitation today.


Small Enough?

Three switches, each with 96 x 100G (or 152 x 50G) host ports, linked by 4 x 400G bundles:

• 288 x 100GE or 456 x 50GE

• One hop

• 3:1 oversubscription
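Both "Small Enough?" designs can be checked as small full meshes. With N switches and k x 400G links between every pair, each switch spends (N - 1) x k ports on inter-switch links and breaks the rest out into 4 x 100G host ports. The sketch below reads the figures under three assumptions. First, each switch has 32 x 400G ports (12.8T). Second, the two-switch design is one pair joined by 8 x 400G. Third, the three 4 x 400G bundles form a triangle, so every switch is linked to both others. These are interpretations of the figures, not published specifications.

```python
from fractions import Fraction

def full_mesh(n_switches, links_per_pair, ports_400g=32, breakout=4):
    """Size a full mesh of identical switches with `ports_400g` 400G ports each.

    Each switch uses (n - 1) * links_per_pair ports to reach the others and
    breaks the remaining 400G ports out into `breakout` x 100G host ports.
    Returns (total 100G host ports, host:uplink bandwidth ratio).
    """
    uplinks = (n_switches - 1) * links_per_pair
    host_ports = (ports_400g - uplinks) * breakout      # 100G host ports per switch
    ratio = Fraction(host_ports * 100, uplinks * 400)   # host bandwidth / uplink bandwidth
    return n_switches * host_ports, ratio

for n, k in ((2, 8), (3, 4)):
    hosts, ratio = full_mesh(n, k)
    print(f"{n} switches, {k} x 400G per pair: {hosts} x 100G hosts, "
          f"{ratio.numerator}:{ratio.denominator} oversubscription")
```

Both cases give 3:1 with 96 host ports per switch, matching the slides. Because the (N - 1) x k uplink cost grows with every switch added, larger fabrics move to a leaf-spine design.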


Designing 400G Fabric Without Oversubscription

• Switches: N3432D-S

• Leaf1, Leaf2, ... Leaf16: each with 64 x 100G (or 128 x 50G) server ports and 2 x 400GE uplink bundles

• 64 servers per leaf x 16 leaves = 1024 x 100G servers

• 128 servers per leaf x 16 leaves = 2048 x 50G servers
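The non-blocking fabric can be sized from port counts alone. The sketch makes three assumptions: every switch is a 32 x 400G N3432D-S; each leaf splits its ports evenly between server breakouts and uplinks (16 + 16, so 1:1 with no oversubscription); and the 2 x 400GE in the figure is the bundle from each leaf to each spine. Under those assumptions the design needs 8 spines, and each spine's 32 ports are exactly filled by 16 leaves x 2 links.

```python
PORTS_400G = 32   # assumed N3432D-S port count: 32 x 400G

def leaf_spine(links_per_spine=2, ports=PORTS_400G, breakout_100g=4):
    down = ports // 2                      # half of each leaf's ports face servers...
    up = ports - down                      # ...half face spines: 1:1, no oversubscription
    spines = up // links_per_spine         # each spine gets links_per_spine leaf uplinks
    leaves = ports // links_per_spine      # a spine's ports limit how many leaves attach
    servers_100g = leaves * down * breakout_100g
    return {"spines": spines, "leaves": leaves,
            "servers_100g": servers_100g, "servers_50g": servers_100g * 2}

print(leaf_spine())
# {'spines': 8, 'leaves': 16, 'servers_100g': 1024, 'servers_50g': 2048}
```

The 1024 x 100G and 2048 x 50G totals match the slide. Raising `links_per_spine` trades leaf count for fewer, more heavily connected spines.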


One ASIC Design – Why is it important?

A modular (or director-class) switch is essentially a leaf-spine network in one chassis: the line-card modules are the leaves and the fabric modules are the spine.

In a semi-modular or one-ASIC design there is no internal hop: every port connects directly to the ASIC, and a module serves only as a “connector”, which saves cost.
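The hop argument can be made concrete by counting ASIC traversals. In a modular chassis, a packet moving between ports on different line cards crosses the ingress line-card ASIC, a fabric-module ASIC, and the egress line-card ASIC. In a one-ASIC design it crosses one. The per-ASIC latency below is a placeholder assumption that reuses the 190 ns port-to-port figure quoted earlier purely for scale. The sketch also assumes traffic crosses between line cards, since some chassis switch same-card traffic locally.

```python
PER_ASIC_NS = 190   # placeholder per-ASIC forwarding latency, for scale only

def asic_traversals(design):
    """ASICs a packet crosses between two front-panel ports."""
    if design == "one-asic":
        return 1    # every port sits on the same ASIC: no internal hop
    if design == "modular":
        return 3    # ingress line card -> fabric module -> egress line card
    raise ValueError(f"unknown design: {design}")

for design in ("one-asic", "modular"):
    n = asic_traversals(design)
    print(f"{design}: {n} ASIC(s), ~{n * PER_ASIC_NS} ns")
```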


[Figure: research network topology]

• I2/Internet/AWS

• Co-location site/Data Center: IP Routing Domain 4

• Campus site 1: IP Routing Domain 1; Campus site 2: IP Routing Domain 2; Campus site 3: IP Routing Domain 3; campus sites have Core and MDF layers

• Links: 400GE, 100GE, dark fiber (1 x 400G), 400GE direct connect

• Optics: LR and ZR
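The topology mixes LR and ZR optics. A simple first filter is to pick the shortest-reach 400G PHY whose nominal reach covers the fiber distance. The sketch uses the reach values listed in the Ethernet PHY table earlier in this deck. Real link budgets also depend on fiber loss, connectors, and amplification, so treat the result as a starting point only. The example distances are hypothetical.

```python
# Nominal reaches in meters, from the 400G PHY table (SR16 uses the OM4 value).
REACH_M = {
    "400GBASE-SR16": 100,       # multimode fiber
    "400GBASE-DR4": 500,
    "400GBASE-FR8": 2_000,
    "400GBASE-LR8": 10_000,
    "400GBASE-ZR": 100_000,
}

def pick_optic(distance_m, reach=REACH_M):
    """Return the shortest-reach PHY whose nominal reach covers distance_m."""
    candidates = [(r, name) for name, r in reach.items() if r >= distance_m]
    if not candidates:
        raise ValueError(f"no 400G PHY in the table reaches {distance_m} m")
    return min(candidates)[1]

print(pick_optic(3_000))    # hypothetical campus-to-core run: 400GBASE-LR8
print(pick_optic(40_000))   # hypothetical dark-fiber span to the colo: 400GBASE-ZR
```

SR16 needs multimode fiber, so on single-mode plant you would remove it from the dictionary before selecting.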

Streaming Telemetry with ASIC

• ASIC: captures and forwards high-delay and buffer-drop packets (header and flow info) to the NX-OS supervisor (SUP) through a CoPP rate limiter

• NX-OS SUP: BDC/HDC parser; SW telemetry encodes the data as GPB and sends it over a gRPC transport (Docker daemon, gRPC server)

• Collection: on-demand subscription between a gRPC client and the gRPC server, with JSON output to an external receiver or application
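On the receiving side, the external application gets telemetry as JSON. The sketch below shows how a receiver might filter records for buffer-drop and high-delay events and count them per flow. The record layout is hypothetical, including keys such as `event`, `src`, `dst`, `dport`, and `queue_delay_ns`. The actual NX-OS telemetry schema is not shown in these slides, so map the keys to whatever your collector emits.

```python
import json
from collections import Counter

# Hypothetical JSON records, as an external receiver might see them.
RAW = """[
  {"event": "buffer_drop", "src": "10.0.0.1", "dst": "10.0.1.5", "dport": 4791},
  {"event": "high_delay", "src": "10.0.0.2", "dst": "10.0.1.5", "dport": 4791,
   "queue_delay_ns": 12000},
  {"event": "buffer_drop", "src": "10.0.0.1", "dst": "10.0.1.5", "dport": 4791}
]"""

def flow_key(rec):
    """Identify a flow by (source IP, destination IP, destination port)."""
    return (rec["src"], rec["dst"], rec["dport"])

def summarize(raw):
    """Count buffer-drop and high-delay events per (flow, event type)."""
    counts = Counter()
    for rec in json.loads(raw):
        if rec.get("event") in ("buffer_drop", "high_delay"):
            counts[(flow_key(rec), rec["event"])] += 1
    return counts

for (flow, event), n in summarize(RAW).most_common():
    print(event, flow, n)
```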