2003.03.17 - SLIDE 1IS246 - SPRING 2003 Lecture 15: Automated Analysis: Video IS246 Multimedia...


2003.03.17 - SLIDE 1 - IS246 - SPRING 2003

Lecture 15: Automated Analysis: Video

IS246 Multimedia Information

(FILM 240, Section 4)

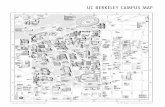

Prof. Marc Davis, UC Berkeley SIMS

Monday and Wednesday 2:00 pm – 3:30 pm

Spring 2003

http://www.sims.berkeley.edu/academics/courses/is246/s03/

2003.03.17 - SLIDE 2 - IS246 - SPRING 2003

Today’s Agenda

• Review of Last Time

– Automated Analysis: Audio

• Automated Analysis: Video

– Signal-Based Parsing

– Image Analysis

– Video Analysis

– Discussion Questions

• Action Items for Next Time

2003.03.17 - SLIDE 4 - IS246 - SPRING 2003

Low-Level Audio Descriptors

• Silence, voice onset (Arons)

• Speech/music (Scheirer)

• Rough spectral features (Muscle Fish)

– Loud/soft, bright/dark, low/high

• Musical pitch and timbre

• Speech emphasis/prosody (Chen, Arons)

• Characteristic audio events

– Cheers, gunshots (Pfeiffer)

• Musical tempo (Cook & Tzanetakis)

– Rhythmic strength?

• Fine spectral features

– Spectrogram, Mel-frequency cepstral coefficients
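As a rough illustration of the “loud/soft” and “bright/dark” descriptors above, the sketch below computes two per-frame features for a mono signal: RMS energy (loudness) and spectral centroid (a common brightness measure, assumed here; the systems cited above may compute their features differently). Samples are assumed to be floats in [-1, 1], the frame size is arbitrary, and all function names are hypothetical. A naive DFT keeps the sketch dependency-free; a real system would use an FFT.

```python
import math

def frames(signal, size):
    """Split a signal into non-overlapping frames (a trailing partial frame is dropped)."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, size)]

def rms(frame):
    """Root-mean-square energy: a simple loud/soft descriptor."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency in Hz: a simple bright/dark descriptor.

    Uses a naive O(N^2) DFT over the non-negative frequency bins for clarity.
    """
    n = len(frame)
    num = den = 0.0
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        mag = math.hypot(re, im)
        num += (k * sample_rate / n) * mag  # bin k corresponds to k * fs / n Hz
        den += mag
    return num / den if den > 0 else 0.0  # silent frame: no spectral energy

def describe(signal, sample_rate, frame_size=256):
    """Return (rms, centroid_hz) for each frame."""
    return [(rms(f), spectral_centroid(f, sample_rate)) for f in frames(signal, frame_size)]
```

For a 500 Hz tone sampled at 8 kHz the centroid comes out near 500 Hz and for a 3 kHz tone near 3000 Hz, while an all-zero frame yields (0.0, 0.0); thresholding the RMS value is the simplest form of the silence detection listed first on the slide.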

2003.03.17 - SLIDE 5 - IS246 - SPRING 2003

Speech Recognition

• Ideally, bridges the “semantic gap”

• One little problem:

– IT DOESN’T WORK!

• Spectrum of reliability

– Known vs. unknown speaker (+ training)

– Known or small vs. unknown vocabulary (+ training)

– Read text vs. conversational speech

– Standard English vs. dialects, accents, other languages

– Close microphone vs. distant

– Clean, quiet vs. mixed or noisy conditions

– Known acoustic channel vs. telephone

2003.03.17 - SLIDE 7 - IS246 - SPRING 2003

Signal-Based Parsing

• Practical problem

– Parsing unstructured, unknown video is very, very hard

• Theoretical problem

– Mismatch between percepts and concepts

2003.03.17 - SLIDE 8 - IS246 - SPRING 2003

Perceptual/Conceptual Issue

(Images: Clown Nose, Red Sun)

Similar Percepts / Dissimilar Concepts

2003.03.17 - SLIDE 9 - IS246 - SPRING 2003

Perceptual/Conceptual Issue

(Images: John Dillinger’s car, Timothy McVeigh’s car)

Dissimilar Percepts / Similar Concepts

2003.03.17 - SLIDE 10 - IS246 - SPRING 2003

Signal-Based Parsing

• Effective and useful automatic parsing

– Video

• Scene break detection

• Camera motion analysis

• Low-level visual similarity

• Feature tracking

– Audio

• Pause detection

• Audio pattern matching

• Simple speech recognition

• Approaches to automated parsing

– At the point of capture, integrate the recording device, the environment, and agents in the environment into an interactive system

– After capture, use “human-in-the-loop” algorithms to leverage human and machine intelligence
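Pause detection, listed above among the audio parsing techniques that work reasonably well, can be sketched as finding runs of low-energy frames that last long enough to count as a pause. The threshold, frame size, and minimum pause length below are illustrative assumptions, and the function names are hypothetical; practical systems usually adapt the threshold to the background noise level.

```python
import math

def frame_energies(signal, frame_size):
    """RMS energy of each non-overlapping frame (trailing partial frame dropped)."""
    return [
        math.sqrt(sum(x * x for x in signal[i:i + frame_size]) / frame_size)
        for i in range(0, len(signal) - frame_size + 1, frame_size)
    ]

def detect_pauses(signal, sample_rate, frame_size=160, threshold=0.01, min_pause_s=0.2):
    """Return (start_s, end_s) spans where frame energy stays below `threshold`
    for at least `min_pause_s` seconds. All parameters are illustrative."""
    energies = frame_energies(signal, frame_size)
    frame_s = frame_size / sample_rate
    pauses, run_start = [], None
    for i, e in enumerate(energies + [float("inf")]):  # sentinel closes a trailing run
        if e < threshold:
            if run_start is None:
                run_start = i  # a quiet run begins
        elif run_start is not None:
            if (i - run_start) * frame_s >= min_pause_s:
                pauses.append((run_start * frame_s, i * frame_s))
            run_start = None  # too short, or already recorded
    return pauses
```

With 20 ms frames at 8 kHz, a half-second gap between two tones is reported as one pause, while a 0.1 s gap is ignored as too short.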

2003.03.17 - SLIDE 11 - IS246 - SPRING 2003

Image/Video Analysis Communities

• Research and development

– Academia

• MIT, CMU, Georgia Tech, UC Berkeley, Columbia University

– Government

• NSA, CIA, FBI, etc.

– Industry

• Media, retail, training, consumer electronics, surveillance, industrial automation, libraries

• Research directions

– Computer vision, robotics, knowledge representation, digital libraries, media asset management, production automation

2003.03.17 - SLIDE 12 - IS246 - SPRING 2003

Major Areas

• Image analysis

– Color similarity

– Texture similarity

– Shape similarity

– Spatial similarity

– Object presence analysis

• Video analysis

– Temporal segmentation

– Content and motion analysis

2003.03.17 - SLIDE 13 - IS246 - SPRING 2003

Semantic Gap

• Most of the disappointments with early retrieval systems come from the lack of recognizing the existence of the semantic gap and its consequences for system set-up

• The semantic gap is the lack of coincidence between the information that one can extract from the visual data and the interpretation that the same data have for a user in a given situation

2003.03.17 - SLIDE 14 - IS246 - SPRING 2003

Sensory Gap

• The sensory gap is the gap between the object in the world and the information in a (computational) description derived from a recording of that scene

• The sensory gap makes the description of objects an ill-posed problem:

– It yields uncertainty in what is known about the state of the object

– The sensory gap is particularly poignant when precise knowledge of the recording conditions is missing

– The 2D-records of different 3D-objects can be identical; without further knowledge, one has to decide that they might represent the same object

– A 2D-recording of a 3D-scene contains information accidental for that scene and that sensing, but one does not know what part of the information is scene-related
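One concrete way to see why the 2D-records of different 3D-objects can be identical is the ideal pinhole camera model, assumed here for simplicity (real cameras add lens distortion and noise). With focal length $f$, a scene point $(X, Y, Z)$ in camera coordinates projects to image coordinates $(x, y)$, and scaling the point by any $s > 0$ leaves its projection unchanged:

```latex
\begin{aligned}
x  &= f\,\frac{X}{Z}, & y  &= f\,\frac{Y}{Z} \\
x' &= f\,\frac{sX}{sZ} = x, & y' &= f\,\frac{sY}{sZ} = y \qquad (s > 0)
\end{aligned}
```

So an object twice as large and twice as far away produces exactly the same 2D record, and a single image cannot distinguish the two without further knowledge about depth or size.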

2003.03.17 - SLIDE 15 - IS246 - SPRING 2003

At the End of the Early Years

• “Computer vision researchers should identify features required for interactive image understanding, rather than their discipline’s current emphasis on automatic techniques” (1992)

2003.03.17 - SLIDE 18 - IS246 - SPRING 2003

Image Similarity

• Extraction of features or image signatures from the images

– Efficient representation and storage strategy for this precomputed data

• A set of similarity measures

– Capture some perceptually meaningful definition of similarity

– Efficiently computable when matching an example with the whole database

• A user interface for

– The choice of which definition(s) of similarity should be applied for retrieval

– The ordered and visually efficient presentation of retrieved images

– Supporting relevance feedback
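The first two components above can be sketched as a tiny query-by-example engine: signatures are computed once when an image is added, a distance function is applied at query time, and results come back ranked. Images here are hypothetical lists of (R, G, B) pixels, the signature is simply the mean color, and the class and method names are illustrative rather than taken from any particular system. The user-interface component (choosing the similarity definition, presentation, relevance feedback) is only hinted at by the pluggable `extract` and `distance` parameters.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Pixel = Tuple[int, int, int]
Signature = Tuple[float, ...]

def mean_color(pixels: Sequence[Pixel]) -> Signature:
    """A deliberately crude signature: the average R, G, B value."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))

def l1_distance(a: Signature, b: Signature) -> float:
    """Sum of absolute per-component differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

class ImageIndex:
    def __init__(self,
                 extract: Callable[[Sequence[Pixel]], Signature] = mean_color,
                 distance: Callable[[Signature, Signature], float] = l1_distance):
        self.extract = extract
        self.distance = distance
        self.signatures: Dict[str, Signature] = {}  # precomputed at insertion time

    def add(self, image_id: str, pixels: Sequence[Pixel]) -> None:
        self.signatures[image_id] = self.extract(pixels)

    def query(self, example: Sequence[Pixel], k: int = 3) -> List[Tuple[str, float]]:
        """Rank stored images by distance to the example, smallest first."""
        q = self.extract(example)
        scored = [(img, self.distance(q, sig)) for img, sig in self.signatures.items()]
        return sorted(scored, key=lambda pair: pair[1])[:k]
```

Querying with a reddish example ranks a red “clown nose” image first and a red “sun” image close behind, well ahead of a blue “sky” image: exactly the similar-percepts, dissimilar-concepts problem from the earlier slides.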

2003.03.17 - SLIDE 19 - IS246 - SPRING 2003

Image Similarity

• Color similarity

– Choice of color space matters

• Texture similarity

– Specific textures can be parsed well

• Shape similarity

– Scale, rotation, and deformation affect shape

• Spatial similarity

– Relative vs. absolute positions and distances

• Object presence analysis

– Face detection/recognition is an active area
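To illustrate why the choice of color space matters, the sketch below builds a coarse hue histogram in HSV using Python’s standard `colorsys` module and compares images with histogram intersection. The bin count and the saturation/value cutoffs are illustrative assumptions, and the function names are hypothetical.

```python
import colorsys
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]

def hue_histogram(pixels: Sequence[Pixel], bins: int = 12,
                  min_sat: float = 0.2, min_val: float = 0.2) -> List[float]:
    """Normalized hue histogram, skipping near-gray or near-black pixels
    whose hue is unreliable. Cutoffs are illustrative."""
    hist = [0.0] * bins
    counted = 0
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)  # h in [0, 1)
        if s < min_sat or v < min_val:
            continue
        hist[min(int(h * bins), bins - 1)] += 1
        counted += 1
    return [c / counted for c in hist] if counted else hist

def intersection(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Histogram intersection: 1.0 for identical normalized histograms, 0.0 for disjoint ones."""
    return sum(min(a, b) for a, b in zip(h1, h2))
```

In RGB, bright red (255, 0, 0) and dark red (100, 0, 0) differ by 155 in the red channel, yet their hue histograms are identical, while pure blue shares nothing with either. The trade-off is that hue alone ignores lightness, and the hue circle wraps around at red, so slightly bluish reds can land in the last bin rather than the first.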

2003.03.17 - SLIDE 20 - IS246 - SPRING 2003

Object Segmentation

• “Object segmentation for broad domains of general images is not likely to succeed, with a possible exception for sophisticated techniques in very narrow domains.”

2003.03.17 - SLIDE 23 - IS246 - SPRING 2003

Video Analysis

• Temporal segmentation

– Shot boundary detection

• Camera work and object motion analysis

– Pan and zoom detection

– Object tracking

• Framing and focus

• Video soundtrack analysis

• Video scene analysis

– Video “paragraphing” in restricted domains

2003.03.17 - SLIDE 24 - IS246 - SPRING 2003

Temporal Segmentation

• Shot change properties

– Motion

• Camera motion

• Object motion

– Image

• Luminosity

• Color

• Noise

– Type

• Abrupt (cut)

• Progressive (dissolve, fade, wipe)

• Shot change detection methods

– Difference in statistical signatures of image change

– Explicit modeling of motion
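The first detection method, differences in statistical signatures, is commonly illustrated with frame-to-frame histogram comparison: an abrupt cut shows up as one large jump between consecutive frames. The sketch below assumes frames are lists of 8-bit gray levels and uses an arbitrary threshold; the function names are hypothetical. It detects only cuts, since progressive transitions such as dissolves spread the change over many frames and need other handling (for example, accumulated differences or the motion modeling listed above).

```python
from typing import List, Sequence

def gray_histogram(frame: Sequence[int], bins: int = 16) -> List[float]:
    """Normalized histogram of 8-bit gray levels (0-255)."""
    hist = [0.0] * bins
    for v in frame:
        hist[v * bins // 256] += 1
    return [c / len(frame) for c in hist]

def histogram_difference(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Half the L1 distance: 0.0 for identical histograms, 1.0 for disjoint ones."""
    return 0.5 * sum(abs(a - b) for a, b in zip(h1, h2))

def detect_cuts(frames: Sequence[Sequence[int]], threshold: float = 0.5) -> List[int]:
    """Indices i where a cut is declared between frame i-1 and frame i."""
    hists = [gray_histogram(f) for f in frames]
    return [i for i in range(1, len(hists))
            if histogram_difference(hists[i - 1], hists[i]) > threshold]
```

Because a histogram ignores where pixels are, this signature tolerates camera and object motion within a shot, but it can miss a cut between two shots with similar overall tone distributions.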

2003.03.17 - SLIDE 25 - IS246 - SPRING 2003

Video Analysis

• Video abstraction and representation

– Video icon construction

– Video key-frame extraction

– Video skimming

• Shot similarity and content-based retrieval

• Visual presentation and annotation for video

• Video indexing and visual cataloguing

• Computer-assisted video annotation and transcription
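Key-frame extraction, one form of video abstraction listed above, can be sketched once shot boundaries are known: for each shot, pick the frame whose histogram is closest to the shot’s average histogram. This selection rule is an illustrative assumption, one of many possible. Frames are hypothetical lists of 8-bit gray levels, shot start indices are assumed to come from a separate shot boundary detector, and the function names are hypothetical.

```python
from typing import List, Sequence

def gray_histogram(frame: Sequence[int], bins: int = 16) -> List[float]:
    """Normalized histogram of 8-bit gray levels (0-255)."""
    hist = [0.0] * bins
    for v in frame:
        hist[v * bins // 256] += 1
    return [c / len(frame) for c in hist]

def key_frames(frames: Sequence[Sequence[int]], shot_starts: Sequence[int]) -> List[int]:
    """Return one representative frame index per shot.

    shot_starts: sorted start indices of shots, beginning with 0.
    """
    hists = [gray_histogram(f) for f in frames]
    bounds = list(shot_starts) + [len(frames)]
    chosen = []
    for start, end in zip(bounds, bounds[1:]):
        shot = hists[start:end]
        mean = [sum(col) / len(shot) for col in zip(*shot)]  # average histogram of the shot
        # Frame whose histogram is closest (L1) to the shot average.
        best = min(range(start, end),
                   key=lambda i: sum(abs(a - b) for a, b in zip(hists[i], mean)))
        chosen.append(best)
    return chosen
```

Choosing the frame nearest the average, rather than simply the first frame, tends to avoid frames at the very edge of a shot, which may be affected by a transition.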

2003.03.17 - SLIDE 27 - IS246 - SPRING 2003

Video Similarity

• Query:

– Retrieve a video segment of “a hammer hitting a nail into a piece of wood”

• Sample results:

– Video of a hammer hitting a nail into a piece of wood

– Video of a hammer, a nail, and a piece of wood

– Video of a nail hitting a hammer, and a piece of wood

– Video of a sledgehammer hitting a spike into a railroad tie

– Video of a rock hitting a nail into a piece of wood

– Video of a hammer swinging

– Video of a nail in a piece of wood

2003.03.17 - SLIDE 28 - IS246 - SPRING 2003

Types of Video Similarity

• Semantic

– Similarity of descriptors

• Relational

– Similarity of relations among descriptors in compound descriptors

• Temporal

– Similarity of temporal relations among descriptors and compound descriptors

2003.03.17 - SLIDE 29 - IS246 - SPRING 2003

Retrieval Examples to Think With

• “Video of a hammer, a nail, and a piece of wood”

– Exact semantic and temporal similarity, but no relational similarity

• “Video of a nail hitting a hammer, and a piece of wood”

– Exact semantic and temporal similarity, but incorrect relational similarity

• “Video of a sledgehammer hitting a spike into a railroad tie”

– Approximate semantic similarity of the subject and objects of the action, exact semantic similarity of the action, and exact temporal and relational similarity

• “Video of a hammer swinging” cut to “Video of a nail in a piece of wood”
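Semantic and relational similarity can be made concrete with a toy representation, assumed here purely for illustration: each video is described by a list of entities plus a list of (subject, action, object) relations, and a small hand-made table gives approximate similarity between related terms (hammer/sledgehammer, nail/spike, wood/railroad tie). The scores, table, and function names are invented for this example and do not come from the lecture or any specific system. Temporal similarity is not modeled; it would additionally compare how descriptors are ordered across shots.

```python
from typing import Dict, FrozenSet, List, Tuple

Triple = Tuple[str, str, str]  # (subject, action, object)

# Hypothetical, hand-made term similarities; identical terms score 1.0,
# unlisted pairs of different terms score 0.0.
RELATED: Dict[FrozenSet[str], float] = {
    frozenset({"hammer", "sledgehammer"}): 0.8,
    frozenset({"nail", "spike"}): 0.8,
    frozenset({"wood", "railroad tie"}): 0.6,
}

def term_sim(a: str, b: str) -> float:
    return 1.0 if a == b else RELATED.get(frozenset({a, b}), 0.0)

def semantic_sim(query: List[str], candidate: List[str]) -> float:
    """Average over query entities of the best-matching candidate entity."""
    if not query or not candidate:
        return 0.0
    return sum(max(term_sim(q, c) for c in candidate) for q in query) / len(query)

def triple_sim(q: Triple, c: Triple) -> float:
    """Role-by-role match, so (nail, hit, hammer) does not match (hammer, hit, nail)."""
    return term_sim(q[0], c[0]) * term_sim(q[1], c[1]) * term_sim(q[2], c[2])

def relational_sim(query: List[Triple], candidate: List[Triple]) -> float:
    """Average over query relations of the best-matching candidate relation."""
    if not query or not candidate:
        return 0.0
    return sum(max(triple_sim(q, c) for c in candidate) for q in query) / len(query)

# "a hammer hitting a nail into a piece of wood"
QUERY_ENTITIES = ["hammer", "nail", "wood"]
QUERY_RELATIONS: List[Triple] = [("hammer", "hit", "nail"), ("nail", "into", "wood")]

def score(entities: List[str], relations: List[Triple]) -> Tuple[float, float]:
    """(semantic, relational) similarity of a described video to the query."""
    return (semantic_sim(QUERY_ENTITIES, entities),
            relational_sim(QUERY_RELATIONS, relations))
```

Under this toy model the exact match scores (1.0, 1.0); “a hammer, a nail, and a piece of wood” scores (1.0, 0.0); “a nail hitting a hammer” also gets 0.0 relational similarity because roles are compared position by position; and the sledgehammer video gets partial credit on both measures.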

2003.03.17 - SLIDE 31 - IS246 - SPRING 2003

Discussion Questions

• On “Content-Based Representation and Retrieval of Visual Media: A State-of-the-Art Review” (Risto Sarvas)

– Are these technologies implemented in current camcorders and editing software? It seems that with these technologies (e.g., video parsing and video abstraction), a simple function like automatically cutting the video into shots wouldn't be too difficult.

– How many of these technologies are available for use? For example, are some of the image similarity algorithms available as open source software?

– The reading focused on analyzing media content after recording. How much technology is there for letting humans analyze the content as it is produced? In other words, what is the state of the art in standardizing content annotation?

2003.03.17 - SLIDE 32 - IS246 - SPRING 2003

Discussion Questions

• On “Content-Based Representation and Retrieval of Visual Media: A State-of-the-Art Review” (Catherine Lai)

– While much effort has been spent on discussing the process or conditions of content-based image retrieval, what constitutes a valid evaluation method? How large should the image databases be, and who decides what should be included in them?

– How can user feedback be exploited to improve user interaction and techniques for image browsing?

2003.03.17 - SLIDE 33 - IS246 - SPRING 2003

Discussion Questions

• On “Content-Based Image Retrieval at the End of the Early Years” (Ana Ramirez)

– To what degree do users have to be experts in computer vision to interactively annotate media or query for media?

– How is the semantic gap for time-based media different from that for static media?

2003.03.17 - SLIDE 34 - IS246 - SPRING 2003

Discussion Questions

• On “Content-Based Image Retrieval at the End of the Early Years” (Ping Yee)

– Consider the various types of queries that are typically possible in content-based image retrieval systems:

• Query by spatial predicate

• Query by image predicate

• Query by group predicate

• Query by spatial example

• Query by image example

• Query by group example

– Which of these queries are likely to be applicable to retrieval for digital video? In what situations might such queries be made?

2003.03.17 - SLIDE 36 - IS246 - SPRING 2003

Presentations on Wednesday

• Every group will have 15 minutes in class on Wednesday, March 19, 2003, to present their work on Assignment 2

• Your presentation must include

– Short plot outline

– Showing of your movie

– Discussion of at least one practical and one theoretical issue you dealt with

– Lessons learned

– Time for questions

2003.03.17 - SLIDE 37 - IS246 - SPRING 2003

Hand In

• Drop off the following files in your designated group drop-off box under M:/is246/assignment2/drop_off/groupX

– Final edit

• Format: MPEG

– Presentation

• Format: MS PowerPoint or HTML

– Paragraph answering one or more of the Questions for Thought

• Format: TXT, MS Word, or HTML