2 Intelligent Agents


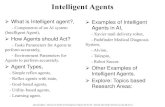

Intelligent Agents

Chapter 2

Team Teaching AI (created by Dewi Liliana)

PTIIK 2012

Outline

• Agents and environments
• Rationality
• PEAS (Performance measure, Environment, Actuators, Sensors)
• Environment types
• Agent types

Agents

An agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators.

Human agent: eyes, ears, and other organs for sensors; hands, legs, mouth, and other body parts for actuators

Robotic agent: cameras and infrared range finders for sensors; various motors for actuators

Agents and environments

The agent function maps from percept histories to actions:

$f : P^{*} \to A$

The agent program runs on the physical architecture to produce $f$.

agent = architecture + program

Vacuum-cleaner world

Percepts: location and contents, e.g., [A,Dirty]

Actions: Left, Right, Suck, NoOp

A vacuum-cleaner agent

Rational agents

A rational agent does the right thing, based on what it can perceive and the actions it can perform.

The right action is the one that will cause the agent to be most successful

Performance measure: An objective criterion for success of an agent's behavior

E.g., the performance measure of a vacuum-cleaner agent could be the amount of dirt cleaned up, the amount of time taken, the amount of electricity consumed, the amount of noise generated, etc.

Goal: among the many objectives, pick one to be the agent's main focus.

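Example goals and their performance measures:

| Goal | Performance measure |
| --- | --- |
| Graduate from university | GPA |
| Become rich | Monthly salary |
| Win the football league | Position in the league table |
| Be happy | Level of happiness |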

Rational agents

Rational agent: For each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has.
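The definition above can be read as an argmax over expected performance. The following is a minimal sketch under stated assumptions: the agent is assumed to have, for each action, a list of (probability, score) outcomes. The names `expected_performance` and `rational_action` and all the numbers are illustrative and do not come from the slides.

```python
from typing import Dict, List, Tuple

# Hypothetical model: each action maps to a list of (probability, score) outcomes.
Outcomes = Dict[str, List[Tuple[float, float]]]


def expected_performance(outcomes: List[Tuple[float, float]]) -> float:
    """Probability-weighted average of the performance scores."""
    return sum(p * score for p, score in outcomes)


def rational_action(model: Outcomes) -> str:
    """Pick the action whose expected performance is highest."""
    return max(model, key=lambda action: expected_performance(model[action]))


# Illustrative numbers only: sucking on a probably-dirty square vs. moving on.
model = {
    "Suck": [(0.8, 10.0), (0.2, -1.0)],
    "Right": [(1.0, 2.0)],
    "NoOp": [(1.0, 0.0)],
}
best = rational_action(model)
```

The agent is judged on the expectation, not on the outcome that actually occurs, which is why rationality does not require omniscience.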

Rational agents

Rationality is distinct from omniscience (being all-knowing, with infinite knowledge).

Agents can perform actions in order to modify future percepts so as to obtain useful information (information gathering, exploration).

An agent is autonomous if its behavior is determined by its own experience (with ability to learn and adapt)

PEAS

PEAS: Performance measure, Environment, Actuators, Sensors

The setting for intelligent agent design must be specified first.

Consider, e.g., the task of designing an automated taxi driver:

• Performance measure: safe, fast, legal, comfortable trip, maximize profits
• Environment: roads, other traffic, pedestrians, customers
• Actuators: steering wheel, accelerator, brake, signal, horn
• Sensors: cameras, sonar, speedometer, GPS, odometer, engine sensors, keyboard

PEAS

Agent: Medical diagnosis system

• Performance measure: healthy patient, minimize costs, lawsuits
• Environment: patient, hospital, staff
• Actuators: screen display (questions, tests, diagnoses, treatments, referrals)
• Sensors: keyboard (entry of symptoms, findings, patient's answers)

PEAS

Agent: Part-picking robot

• Performance measure: percentage of parts in correct bins
• Environment: conveyor belt with parts, bins
• Actuators: jointed arm and hand
• Sensors: camera, joint angle sensors

PEAS

Agent: Interactive English tutor

• Performance measure: maximize student's score on test
• Environment: set of students
• Actuators: screen display (exercises, suggestions, corrections)
• Sensors: keyboard
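A PEAS description is a four-part record, so it can be written down as a small data structure. This is a sketch only: the `PEAS` class and its field names are assumptions made for illustration, filled in with the part-picking robot example from the slides.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PEAS:
    """A task-environment description; field names follow the PEAS acronym."""
    agent: str
    performance: List[str]
    environment: List[str]
    actuators: List[str]
    sensors: List[str]


# Values copied from the part-picking robot slide.
part_picker = PEAS(
    agent="Part-picking robot",
    performance=["Percentage of parts in correct bins"],
    environment=["Conveyor belt with parts", "Bins"],
    actuators=["Jointed arm and hand"],
    sensors=["Camera", "Joint angle sensors"],
)
```

Writing the description out this way makes it harder to forget one of the four parts before starting on the agent design.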

Environment types

Fully observable vs. partially observable: In a fully observable environment, the agent's sensors give it access to the complete state of the environment at each point in time. In practice, an environment is fully observable if the sensors detect all aspects that are relevant to the choice of action. An environment might be partially observable because of noisy and inaccurate sensors.

Deterministic vs. stochastic: In a deterministic environment, the next state is completely determined by the current state and the action executed by the agent. (If the environment is deterministic except for the actions of other agents, then the environment is strategic.)

Environment types

Episodic vs. sequential: In an episodic environment, the agent's experience is divided into atomic "episodes" (each episode consists of the agent perceiving and then performing a single action), and the choice of action in each episode depends only on the episode itself. Examples: classification (episodic); chess and taxi driving (sequential).

Static vs. dynamic: In a static environment, nothing changes while the agent is deliberating, so the agent doesn't need to keep looking at the environment while it decides on an action. A dynamic environment can change during deliberation and is, in effect, continuously asking the agent what it wants to do. (The environment is semidynamic if the environment itself does not change with the passage of time but the agent's performance score does.)

Discrete vs. continuous: A discrete environment has a limited number of distinct states and clearly defined percepts and actions (e.g., chess is discrete, taxi driving is continuous).

Single agent vs. multi-agent: In a single-agent environment, an agent operates by itself. Multi-agent environments may be competitive or cooperative.

Environment types

| | Chess with a clock | Chess without a clock | Taxi driving |
| --- | --- | --- | --- |
| Fully observable | Yes | Yes | No |
| Deterministic | Strategic | Strategic | No |
| Episodic | No | No | No |
| Static | Semi | Yes | No |
| Discrete | Yes | Yes | No |
| Single agent | No | No | No |

• The environment type largely determines the agent design
• The real world is (of course) partially observable, stochastic, sequential, dynamic, continuous, multi-agent
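The six dimensions can be recorded as a small data structure, one instance per task environment. This is an illustrative sketch: the `EnvironmentType` class, its string encoding of the in-between values ("strategic", "semi"), and the helper `is_real_world_like` are assumptions, with values taken from the three examples on the slide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentType:
    """One task environment; string fields allow the in-between values."""
    fully_observable: bool
    deterministic: str  # "yes", "strategic", or "no" (stochastic)
    episodic: bool
    static: str         # "yes", "semi", or "no" (dynamic)
    discrete: bool
    single_agent: bool


def is_real_world_like(env: EnvironmentType) -> bool:
    """True when every dimension takes its harder value."""
    return (
        not env.fully_observable
        and env.deterministic == "no"
        and not env.episodic
        and env.static == "no"
        and not env.discrete
        and not env.single_agent
    )


chess_with_clock = EnvironmentType(True, "strategic", False, "semi", True, False)
chess_without_clock = EnvironmentType(True, "strategic", False, "yes", True, False)
taxi_driving = EnvironmentType(False, "no", False, "no", False, False)
```

Of the three examples, only taxi driving matches the real-world profile in every dimension.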

The Structure of Agents

An agent is completely specified by the agent function mapping percept sequences to actions

One agent function (or a small equivalence class) is rational

Aim: find a way to implement the rational agent function concisely

Table-lookup agent

Keeps track of the percept sequence and uses it to index into a table of actions to decide what to do.

Drawbacks:

• Huge table
• Takes a long time to build the table
• No autonomy
• Even with learning, it needs a long time to learn the table entries
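A minimal sketch of the table-lookup idea, using percepts from the vacuum-cleaner world. The function name `make_table_driven_agent`, the tiny hand-written table, and the `NoOp` fallback for histories missing from the table are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Percept = Tuple[str, str]  # (location, status), e.g. ("A", "Dirty")


def make_table_driven_agent(table: Dict[Tuple[Percept, ...], str]):
    """Return an agent program that looks up its whole percept history."""
    percepts: List[Percept] = []  # grows without bound over the agent's lifetime

    def program(percept: Percept) -> str:
        percepts.append(percept)
        # Histories missing from the table fall back to NoOp (sketch assumption).
        return table.get(tuple(percepts), "NoOp")

    return program


# A tiny hand-written table covering only a few histories.
table = {
    (("A", "Dirty"),): "Suck",
    (("A", "Clean"),): "Right",
    (("A", "Dirty"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
}
agent = make_table_driven_agent(table)
actions = [agent(("A", "Dirty")), agent(("A", "Clean")), agent(("B", "Dirty"))]
```

The third call already falls outside the table. With 4 possible percepts, a complete table for a lifetime of T steps needs 4 + 4^2 + ... + 4^T entries, which is the "huge table" drawback in concrete terms.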

Agent program for a vacuum-cleaner agent
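The program listing for this slide is not part of the transcript. The following is a sketch of one possible program for the vacuum-cleaner world, not a reconstruction of the slide. It assumes two squares named A and B, with B to the right of A, and percepts of the form (location, status) as in the [A, Dirty] example.

```python
def reflex_vacuum_agent(percept):
    """Choose an action from the current percept only."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    # Assumed layout: square B lies to the right of square A.
    if location == "A":
        return "Right"
    return "Left"
```

This program never returns `NoOp`, so it keeps moving back and forth even when both squares are clean.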

Agent types

Four basic types in order of increasing generality:

• Simple reflex agents: select an action based on the current percept, ignoring the rest of the percept history.
• Model-based reflex agents: maintain internal state that depends on the percept history and reflects some unobserved aspects of the current state.
• Goal-based agents: have information about the goal and select actions that achieve it.
• Utility-based agents: assign a quantitative measure (a utility) to states of the environment and prefer actions that lead to higher-utility states.

All of these can be turned into learning agents, which learn from experience and improve their performance.

Simple reflex agents

Condition-action rule: a condition that triggers some action, e.g., if the car in front is braking (condition), then initiate braking (action).

The rule connects percept to action.

A simple reflex agent is simple but has very limited intelligence.

A correct decision can be made only if the environment is fully observable.

Simple reflex agents

    function SIMPLE-REFLEX-AGENT(percept) returns an action
      static: rules, a set of condition-action rules

      state ← INTERPRET-INPUT(percept)
      rule ← RULE-MATCH(state, rules)
      action ← RULE-ACTION[rule]
      return action

o INTERPRET-INPUT generates an abstracted description of the current state from the percept

o RULE-MATCH returns the first rule in the set of rules that matches the given state description
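The pseudocode above translates almost line for line into Python. This is a hedged sketch: the percept encoding (a dict with a `brake_lights_ahead` key), the state names, and the catch-all default rule are assumptions added to make the braking example runnable.

```python
from typing import Callable, List, Tuple

State = str
Rule = Tuple[Callable[[State], bool], str]  # (condition, action)


def interpret_input(percept: dict) -> State:
    """Abstract the raw percept into a state description (hypothetical encoding)."""
    return "car_in_front_braking" if percept.get("brake_lights_ahead") else "road_clear"


def rule_match(state: State, rules: List[Rule]) -> Rule:
    """Return the first rule whose condition holds for the state."""
    for rule in rules:
        if rule[0](state):
            return rule
    raise LookupError(f"no rule matches state {state!r}")


def make_simple_reflex_agent(rules: List[Rule]):
    def program(percept: dict) -> str:
        state = interpret_input(percept)
        _, action = rule_match(state, rules)
        return action
    return program


rules = [
    (lambda s: s == "car_in_front_braking", "initiate_braking"),
    (lambda s: True, "keep_driving"),  # default rule, added for this sketch
]
driver = make_simple_reflex_agent(rules)
```

Calling `driver({"brake_lights_ahead": True})` selects the braking rule. Nothing is remembered between calls, which is what limits this design to fully observable environments.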

Model-based reflex agents

The most effective way to handle partial observability is for the agent to keep track of the part of the world it can't see now.

The agent maintains internal state that depends on the percept history and thereby reflects some of the unobserved aspects of the current state.

Updating the internal state information requires two kinds of knowledge:

• How the world evolves independently of the agent
• How the agent’s own actions affect the world

Knowledge about “how the world works” is called a model.

Model-based reflex agents

    function REFLEX-AGENT-WITH-STATE(percept) returns an action
      static: state, a description of the current world state
              rules, a set of condition-action rules
              action, the most recent action, initially none

      state ← UPDATE-STATE(state, action, percept)
      rule ← RULE-MATCH(state, rules)
      action ← RULE-ACTION[rule]
      return action

o UPDATE-STATE creates the new internal state description
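A sketch of the same structure for a partially observable variant of the vacuum-cleaner world. The assumptions here are not from the slides: the agent senses only the dirt status of its current square, it starts in square A, there are two squares A and B, and dirt never reappears. The agent tracks its own location from its last action, which is the model at work.

```python
def make_model_based_vacuum_agent(start="A"):
    # Internal state: believed location, and which squares are known to be clean.
    state = {"location": start, "clean": {"A": False, "B": False}}
    last_action = None

    def update_state(action, status):
        # How the agent's own actions affect the world: moving changes location.
        if action == "Right":
            state["location"] = "B"
        elif action == "Left":
            state["location"] = "A"
        # How the world evolves on its own: assumed not at all (dirt never
        # returns), so the belief about the other square carries over unchanged.
        state["clean"][state["location"]] = (status == "Clean")

    def program(status):
        nonlocal last_action
        update_state(last_action, status)
        if status == "Dirty":
            action = "Suck"
        elif all(state["clean"].values()):
            action = "NoOp"  # everything is believed clean, so stop
        elif state["location"] == "A":
            action = "Right"
        else:
            action = "Left"
        last_action = action
        return action

    return program
```

Unlike a simple reflex agent, this one can stop once it believes both squares are clean, even though it never sees both squares at the same time.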

Goal-based agents

Knowing about the current state is not always enough to decide what to do.

The agent needs goal information that describes situations that are desirable.
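One way to use goal information is to search for a sequence of actions that leads to a goal state. The slides do not name a search method. Breadth-first search is used here purely as an illustrative choice, and the state encoding (location, A dirty?, B dirty?) and all function names are assumptions.

```python
from collections import deque


def plan_to_goal(start, goal_test, successors):
    """Breadth-first search for a shortest action sequence reaching a goal state."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, path = frontier.popleft()
        if goal_test(state):
            return path
        for action, next_state in successors(state):
            if next_state not in visited:
                visited.add(next_state)
                frontier.append((next_state, path + [action]))
    return None  # no action sequence reaches the goal


def successors(state):
    """Vacuum-world transitions for a state (location, dirty_a, dirty_b)."""
    loc, dirty_a, dirty_b = state
    yield "Left", ("A", dirty_a, dirty_b)
    yield "Right", ("B", dirty_a, dirty_b)
    if loc == "A":
        yield "Suck", ("A", False, dirty_b)
    else:
        yield "Suck", ("B", dirty_a, False)


def all_clean(state):
    """Goal test: both squares are clean."""
    return not state[1] and not state[2]


plan = plan_to_goal(("A", True, True), all_clean, successors)
```

The goal test is the only place where "desirable" is defined, so changing the goal changes the behavior without rewriting any rules.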

Utility-based agents

Achieving the goal alone is not enough to generate high-quality behavior in most environments.

How quick? How safe? How cheap? These need to be measured.

A utility function maps a state onto a real number that reflects the performance measure.
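A sketch of how a utility function separates options that all reach the goal. The routes, the weights, and the state fields (`minutes`, `risk`, `cost`) are invented for illustration and stand in for "how quick, how safe, how cheap".

```python
def utility(state):
    """Hypothetical utility: trade off speed, safety and cost in one real number."""
    return -1.0 * state["minutes"] - 50.0 * state["risk"] - 0.5 * state["cost"]


def best_action(outcomes):
    """Choose the action whose resulting state has the highest utility."""
    return max(outcomes, key=lambda action: utility(outcomes[action]))


# Every route reaches the destination, so a goal test alone cannot rank them.
routes = {
    "highway": {"minutes": 20, "risk": 0.10, "cost": 8.0},
    "side_streets": {"minutes": 30, "risk": 0.02, "cost": 5.0},
    "shortcut": {"minutes": 15, "risk": 0.40, "cost": 6.0},
}
choice = best_action(routes)
```

With these weights the fastest route loses because of its risk. Changing the weights changes the trade-off, which is exactly the design decision a utility function makes explicit.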

Learning agents

Learning element is responsible for making improvements

Performance element is responsible for selecting external actions

Critic gives feedback on how the agent is doing

To Sum Up

• Agents interact with environments through actuators and sensors
• The agent function describes what the agent does in all circumstances
• The performance measure evaluates the environment sequence
• A perfectly rational agent maximizes expected performance
• Agent programs implement (some) agent functions
• PEAS descriptions define task environments
• Environments are categorized along several dimensions: observable? deterministic? episodic? static? discrete? single-agent?
• Several basic agent architectures exist: reflex, reflex with state, goal-based, utility-based